Mark had a breakthrough idea – an AI-driven hiring assistant that could predict a candidate’s success in a company based on past hiring data. The concept was solid, investors showed interest, and his team jumped into development. They worked tirelessly for the next 18 months: training complex models, refining algorithms, and building a polished, full-fledged application.

When the product finally launched, reality hit hard. The AI struggled with accuracy, users found it too rigid, and early adopters abandoned it within weeks. Worse, companies hesitated to entrust hiring decisions entirely to AI. Mark had burned through his funding, spent over a year in development, and was left with an expensive product that no one wanted.

His mistake? Skipping the Minimum Viable Product (MVP) phase.

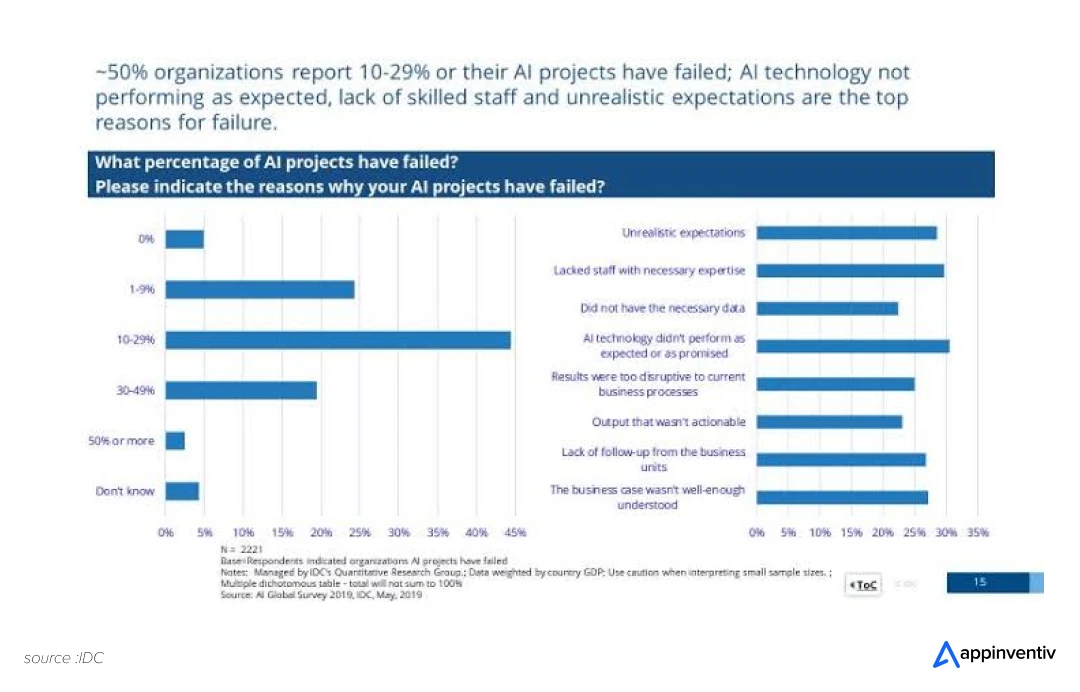

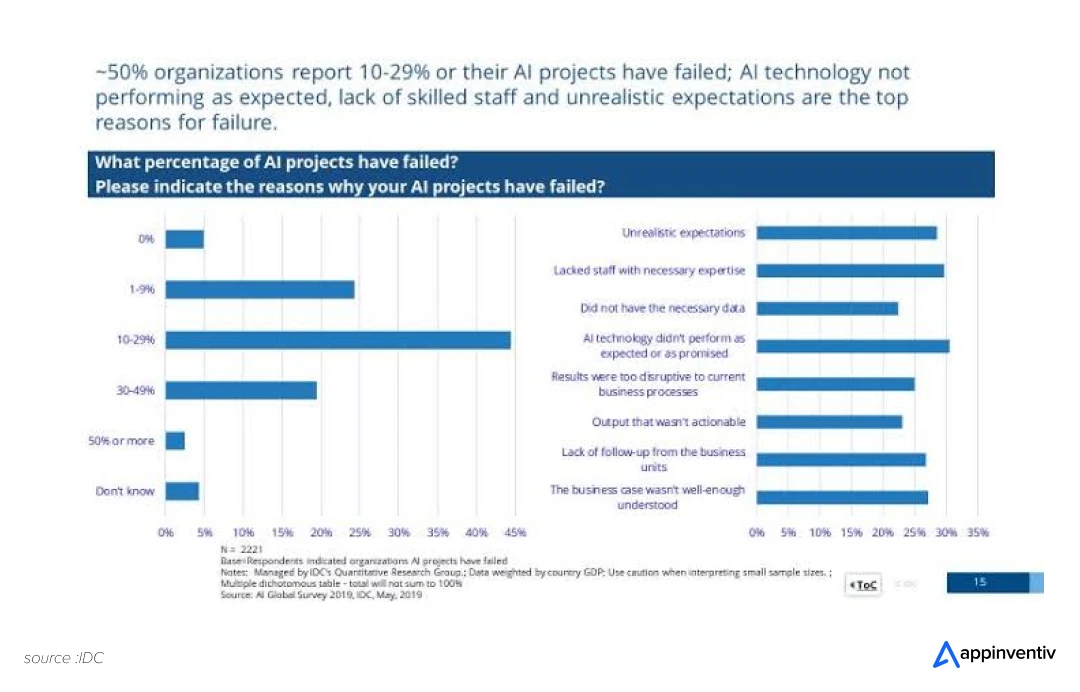

Many AI entrepreneurs fall into the same trap – pouring resources into full-scale products without testing whether real users find them valuable. Unlike traditional software, AI products rely on evolving models, data feedback loops, and iterative improvements. Launching without validation often leads to failure.

So, how do you avoid Mark’s mistake? How do you build an AI MVP that proves your concept before investing heavily? Let’s break it down.

The Benefits of Building a Minimal-First AI MVP

Unlike traditional software, where features work as expected once coded, AI products learn, adapt, and evolve – and that unpredictability makes skipping a Minimum Viable Product (MVP) a dangerous gamble. Many AI entrepreneurs assume their models will work perfectly in real-world scenarios, only to realize too late that their technology isn’t solving the problem as expected.

An AI MVP is the best way to test assumptions, refine AI performance, and ensure market fit before making heavy investments. Here’s why it matters:

AI Models Are Unpredictable in the Real World

Even the most intelligent AI models trained on high-quality datasets can fail unexpectedly. A fraud detection system might flag too many legitimate transactions, an AI medical assistant could struggle with rare conditions, or an autonomous driving model might misinterpret real-world signals.

These failures aren’t just bugs but fundamental problems requiring ongoing refinement. An MVP AI helps businesses test the model in real-world conditions, gathering crucial feedback before committing to a full-scale launch.

Data Quality and Availability Can Make or Break AI

AI is only as good as the data it learns from. Many startups assume they have the right datasets, only to discover later that the data is biased, insufficient, or constantly changing. A customer service AI might fail to handle diverse accents, a legal AI tool may struggle with jurisdictional differences, or an AI-powered recruitment platform may unknowingly favor certain demographics.

An AI Minimum Viable Product (MVP) helps uncover these challenges early. Instead of waiting until the final product is built, companies can test whether their AI can learn effectively from available data or if they need better data sources, preprocessing strategies, or even a pivot in approach.

User Behavior Often Defies Expectations

AI-driven products are built based on hypotheses about how users will interact with them. But real-world usage often tells a different story.

- A chatbot may struggle with human-like conversations, leading to frustrating interactions.

- A recommendation engine might suggest irrelevant content, causing users to disengage.

- An AI-powered enterprise automation tool might feel intrusive rather than helpful.

Without early testing, these problems can go unnoticed until it’s too late. AI MVP development allows companies to observe user behavior, collect feedback, and refine their models before committing to full-scale development.

Cost and Development Time Spiral Without Early Validation

AI is resource-intensive. From training deep learning models to refining them with real-world data, the costs increase quickly, both in computational power and development time. Startups that rush into full-scale AI development without validating their core idea risk sinking months (or even years) into a product that fails to deliver value.

A lean AI MVP strategy prevents this by:

- Identifying feasibility issues early, before they become costly mistakes.

- Avoiding unnecessary features that don’t contribute to real user value.

- Ensuring AI performance is strong enough before investing in large-scale infrastructure.

Instead of spending millions on perfecting an AI model upfront, companies can launch an MVP to test a lean version of their AI solution, iterate based on results, and optimize investments wisely.

Investors and Stakeholders Want Proof, Not Promises

AI is a competitive space, and attracting investors, partners, or early adopters requires more than an idea; it requires proof of viability. Another benefit of building an AI MVP is that it allows startups to demonstrate traction with real-world users, showing measurable impact rather than theoretical potential. Investors are far more likely to fund an AI company with:

- A working prototype that solves a clear problem.

- Initial user adoption and feedback.

- Early validation of AI accuracy and effectiveness.

Instead of pitching a concept on slides, AI entrepreneurs can present real-world results, making fundraising, partnerships, and go-to-market efforts significantly easier.

In short, an AI MVP helps companies reduce uncertainty, refine their models, and validate market demand before making heavy investments.

In the next section, we’ll walk through how to build one, step by step.

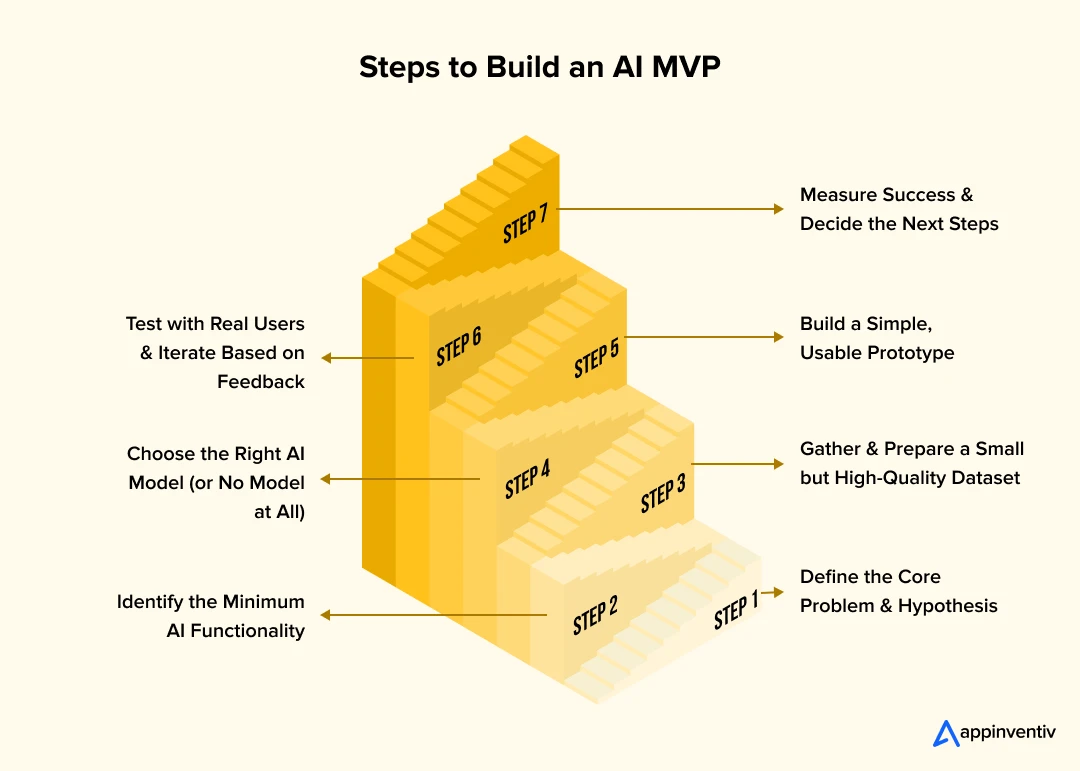

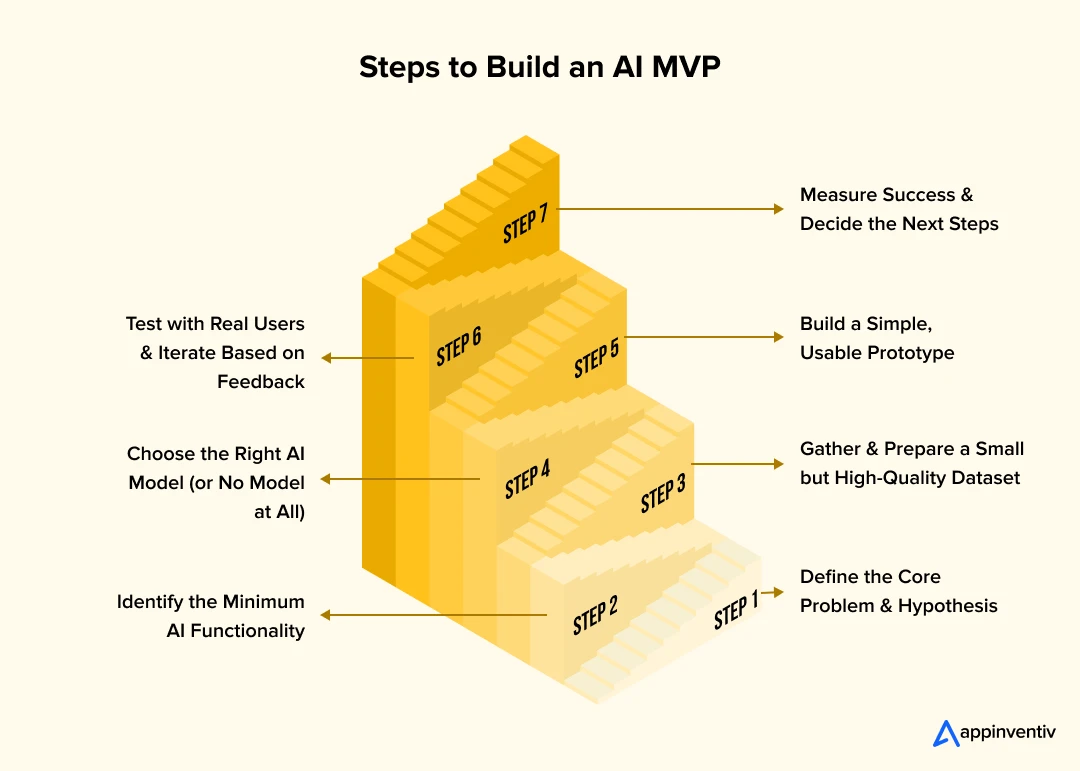

The Step-by-Step Guide on How to Build an AI MVP

Building an AI MVP calls for a structured approach that balances technical feasibility, user validation, and cost efficiency. Unlike a traditional software MVP, an AI MVP depends on data, iterative training, and real-world feedback. Here’s a step-by-step guide to building an AI MVP that proves your concept before full-scale development.

Step 1: Define the Core Problem and Hypothesis

AI should solve a specific, well-defined problem, not just be an impressive piece of technology. Before writing a single line of code, ask:

- What real-world problem does this AI solve?

- Who are the target users, and how do they currently solve this problem?

- How will AI improve upon existing solutions?

At this stage, many entrepreneurs make the mistake of trying to build an AI model that is too complex for an MVP. Instead, focus on a testable hypothesis: “If we apply AI to problem X, it will improve outcome Y.”

Example: A startup wants to build an AI-powered resume screener. The MVP hypothesis could be: “An AI model trained on past hiring data can shortlist candidates 30% faster than manual screening.”

Step 2: Identify the Minimum AI Functionality

An AI MVP doesn’t need to be fully automated or feature-rich. Instead, focus on one critical AI-driven functionality that proves feasibility.

- What’s the simplest AI-powered feature that demonstrates the product’s value?

- Can a rule-based or semi-automated approach work initially?

Example: Instead of developing an end-to-end AI hiring platform, the MVP might be a simple resume-scanning algorithm that ranks candidates based on keywords and experience.
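A keyword-and-experience ranker like the one described above might be sketched as follows. This is a minimal illustration under stated assumptions, not a production screener: the `Resume` record, the scoring weights, the 10-year experience cap, and the `score_resume`/`rank_candidates` names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Resume:
    # Hypothetical minimal resume record for the MVP.
    candidate: str
    text: str
    years_experience: float


def score_resume(resume: Resume, keywords: Set[str],
                 keyword_weight: float = 1.0,
                 experience_weight: float = 0.5,
                 max_years: float = 10.0) -> float:
    """Weighted count of matched keywords plus weighted, capped years of experience."""
    words = {w.strip(".,;:()").lower() for w in resume.text.split()}
    matched = len(words & {k.lower() for k in keywords})
    capped_years = min(resume.years_experience, max_years)
    return keyword_weight * matched + experience_weight * capped_years


def rank_candidates(resumes: List[Resume], keywords: Set[str]) -> List[Tuple[str, float]]:
    """Return (candidate, score) pairs, best first."""
    scored = [(r.candidate, score_resume(r, keywords)) for r in resumes]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    job_keywords = {"python", "sql", "airflow"}  # hypothetical job requirements
    resumes = [
        Resume("A", "Built ETL pipelines in Python and SQL.", 3),
        Resume("B", "Frontend developer, React and CSS.", 6),
        Resume("C", "Python, SQL, Airflow; data engineering.", 2),
    ]
    for name, score in rank_candidates(resumes, job_keywords):
        print(name, score)  # Expected order: C 4.0, A 3.5, B 3.0
```

Capping years of experience keeps long careers from drowning out skill matches. Keyword matching is also easy to game and blind to synonyms, which are exactly the kinds of weaknesses that testing with real users should surface.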

Pro Tip: Many successful AI startups use human-in-the-loop models in their MVP. If AI predictions are inaccurate, a human corrects them, providing real-time feedback to improve the model.
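A human-in-the-loop flow can be sketched as a thin wrapper around any model: confident predictions pass straight through, low-confidence ones go to a reviewer, and every review is logged as a labeled example for the next training round. The 0.8 confidence threshold and the `HumanInTheLoop`, `toy_model`, and `recruiter` names are hypothetical placeholders, not part of any specific product.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HumanInTheLoop:
    """Wrap a model so low-confidence predictions are reviewed by a person.

    model(item) -> (label, confidence); ask_human(item, ai_label) -> final label.
    Both callables are hypothetical hooks supplied by the MVP.
    """
    model: Callable[[str], Tuple[str, float]]
    ask_human: Callable[[str, str], str]
    threshold: float = 0.8
    # (input, AI label, human label) triples, reusable as training data later.
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def predict(self, item: str) -> str:
        label, confidence = self.model(item)
        if confidence >= self.threshold:
            return label  # confident: use the AI output as-is
        final = self.ask_human(item, label)
        self.feedback.append((item, label, final))
        return final

    def correction_rate(self) -> float:
        """Share of reviewed items where the human changed the AI's label."""
        if not self.feedback:
            return 0.0
        changed = sum(1 for _, ai_label, human_label in self.feedback
                      if ai_label != human_label)
        return changed / len(self.feedback)


def toy_model(resume: str) -> Tuple[str, float]:
    # Hypothetical stand-in for a real model.
    return ("shortlist", 0.9) if "python" in resume.lower() else ("reject", 0.6)


def recruiter(resume: str, ai_label: str) -> str:
    # Hypothetical stand-in for a recruiter's judgment.
    return "shortlist" if "sql" in resume.lower() else ai_label


if __name__ == "__main__":
    hitl = HumanInTheLoop(toy_model, recruiter)
    for resume in ["Python developer", "SQL analyst", "Graphic designer"]:
        print(resume, "->", hitl.predict(resume))
    print("correction rate:", hitl.correction_rate())  # 0.5: 1 of 2 reviews changed
```

Watching the correction rate over successive iterations can be a cheap signal of whether the model is actually improving from the feedback it receives.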

Step 3: Gather and Prepare a Small but High-Quality Dataset

AI models depend on data, but collecting large datasets for an MVP can be unnecessary and expensive. At this stage, focus instead on:

- Using open-source datasets if available.

- Starting with a small, high-quality dataset instead of a massive but noisy one.

- Considering synthetic data or manual data labeling for early training.

Example: For the AI hiring assistant, the startup can start with 1,000 manually screened resumes instead of trying to collect data from multiple companies upfront.

Avoid This Mistake: Many AI startups assume they need millions of data points to train an MVP. In reality, a well-curated small dataset can be more effective for initial validation.
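Before training on a small dataset such as the screened resumes above, a few cheap checks can catch obvious quality problems. The sketch below assumes each example is a `(text, label)` pair; the function names and the 20% test split are illustrative choices, not requirements.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (resume text, label), e.g. "shortlist" / "reject"


def dataset_report(examples: List[Example]) -> Dict[str, object]:
    """Size, label balance, and exact-duplicate count for a labeled dataset."""
    labels = Counter(label for _, label in examples)
    # Normalize case and whitespace so trivially different copies count as duplicates.
    normalized = Counter(" ".join(text.lower().split()) for text, _ in examples)
    duplicates = sum(count - 1 for count in normalized.values() if count > 1)
    return {
        "size": len(examples),
        "label_counts": dict(labels),
        # A very small minority share warns that one class may be underrepresented.
        "minority_share": min(labels.values()) / len(examples) if examples else 0.0,
        "duplicates": duplicates,
    }


def stratified_split(examples: List[Example], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[Example], List[Example]]:
    """Hold out a test set that keeps each label's proportion roughly intact."""
    by_label: Dict[str, List[Example]] = defaultdict(list)
    for example in examples:
        by_label[example[1]].append(example)
    rng = random.Random(seed)
    train: List[Example] = []
    test: List[Example] = []
    for items in by_label.values():
        items = items[:]  # copy before shuffling
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Holding out a label-balanced test set from the start means later accuracy numbers reflect data the model never saw, which matters more when the dataset is small.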

Step 4: Choose the Right AI Model (or No Model at All)

Not every AI MVP development process requires a deep learning model from day one. Depending on the problem, simpler techniques may work just as well:

- Rule-based algorithms – If the task is predictable and structured.

- Traditional machine learning – If patterns can be extracted from small datasets.

- Pre-trained AI models – To avoid building from scratch.

- No AI at all – If manual processes can mimic AI for early testing.

Example: Instead of training a complex deep learning model for resume screening, the startup can use a basic keyword-matching algorithm as an MVP.
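One way to act on this advice is to score each candidate approach on the same small labeled set and keep the simplest one that clears your accuracy bar. In this sketch, the candidates are ordered from simplest to most complex; the 0.75 target, the sample data, and the function names are assumptions for illustration.

```python
from typing import Callable, List, Optional, Tuple

Predictor = Callable[[str], str]
Labeled = List[Tuple[str, str]]


def accuracy(predict: Predictor, labeled: Labeled) -> float:
    """Fraction of labeled examples the predictor gets right."""
    correct = sum(1 for text, label in labeled if predict(text) == label)
    return correct / len(labeled)


def pick_simplest(candidates: List[Tuple[str, Predictor]], labeled: Labeled,
                  target: float) -> Optional[Tuple[str, float]]:
    """Return (name, accuracy) of the first candidate meeting the target, else None.

    Candidates must be listed from simplest to most complex.
    """
    for name, predict in candidates:
        score = accuracy(predict, labeled)
        if score >= target:
            return name, score
    return None


def always_reject(text: str) -> str:
    return "reject"  # "no AI" baseline: one constant decision


def keyword_rule(text: str) -> str:
    return "shortlist" if "python" in text.lower() else "reject"


holdout = [("Python, SQL", "shortlist"), ("React, CSS", "reject"),
           ("Python, Airflow", "shortlist"), ("Photoshop", "reject")]
candidates = [("constant baseline", always_reject), ("keyword rule", keyword_rule)]
print(pick_simplest(candidates, holdout, target=0.75))  # ('keyword rule', 1.0)
```

Only if every simple option misses the target would it make sense to append a traditional ML model or a fine-tuned pre-trained model to the end of the list.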

Pro Tip: Many successful AI startups fake AI in their MVP – using humans behind the scenes to validate whether AI automation is truly needed before investing in it.

Step 5: Build a Simple, Usable Prototype

Your AI MVP should be packaged into a basic but functional interface, even if it’s just a web form, chatbot, or API. The goal is not to impress users with design but to test core AI functionality.

Example: Instead of a fully built AI hiring dashboard, the startup can create a simple web page where users upload resumes and receive AI-generated rankings via email.
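A prototype interface can be as small as a single HTTP endpoint. The sketch below uses only Python's standard-library WSGI interface; to keep it short, it accepts JSON rather than file uploads and returns the ranking directly instead of emailing it. The `/rank` route, the payload shape, and the keyword list are hypothetical.

```python
import json
from typing import Callable, Iterable, List

KEYWORDS = {"python", "sql", "airflow"}  # hypothetical job keywords


def keyword_score(text: str) -> int:
    """Count how many job keywords appear in the resume text."""
    words = {w.strip(".,;:()").lower() for w in text.split()}
    return len(words & KEYWORDS)


def _json_response(start_response: Callable, status: str, payload: dict) -> List[bytes]:
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """WSGI app: POST /rank with {"resumes": [{"candidate": ..., "text": ...}]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/rank":
        return _json_response(start_response, "404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        ranking = sorted(
            ({"candidate": r["candidate"], "score": keyword_score(r["text"])}
             for r in payload["resumes"]),
            key=lambda item: item["score"],
            reverse=True,
        )
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing fields, or wrong types all become a 400.
        return _json_response(start_response, "400 Bad Request", {"error": "bad request"})
    return _json_response(start_response, "200 OK", {"ranking": ranking})
```

For a local demo, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves this app; a real deployment would put it behind a production WSGI server.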

Key Takeaway: Your AI MVP doesn’t need a polished UI, only enough of an interface to prove that the AI solves the problem efficiently.

Step 6: Test with Real Users and Iterate Based on Feedback

Once the MVP is ready, test it with early adopters or beta users to collect feedback:

- Does the AI provide useful insights?

- Are the predictions accurate and relevant?

- What’s missing, and where does AI struggle?

At this stage, errors and inaccuracies are expected – but that’s the point. Instead of guessing improvements, companies can refine the AI based on real-world feedback.

Example: If recruiters find the AI hiring assistant’s rankings unreliable, the startup can adjust its algorithm, collect more data, or fine-tune its criteria.
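To turn “Are the predictions accurate and relevant?” into numbers, one option is to compare the AI’s top-ranked candidates with the ones recruiters actually chose. The sketch below computes precision and recall at k; the candidate IDs and k = 3 are made up for illustration.

```python
from typing import List, Set


def precision_at_k(ranking: List[str], relevant: Set[str], k: int) -> float:
    """Share of the AI's top-k candidates that recruiters also picked."""
    top_k = ranking[:k]
    if not top_k:
        return 0.0
    return sum(1 for c in top_k if c in relevant) / len(top_k)


def recall_at_k(ranking: List[str], relevant: Set[str], k: int) -> float:
    """Share of recruiter-picked candidates that appear in the AI's top k."""
    if not relevant:
        return 0.0
    return sum(1 for c in ranking[:k] if c in relevant) / len(relevant)


ai_ranking = ["C", "A", "D", "B", "E"]  # AI order, best first (hypothetical)
recruiter_picks = {"A", "B", "C"}       # candidates recruiters shortlisted (hypothetical)
print(precision_at_k(ai_ranking, recruiter_picks, 3))  # 2 of top 3, about 0.667
print(recall_at_k(ai_ranking, recruiter_picks, 3))     # 2 of 3 picks found, about 0.667
```

Tracking these two numbers per iteration shows whether an algorithm change actually moved the rankings toward what recruiters consider relevant.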

Step 7: Measure Success and Decide the Next Steps

The MVP should prove or disprove the AI hypothesis. If the results are promising, the next steps may include:

- Scaling the AI model with more training data.

- Automating manual processes that were used as placeholders.

- Refining the UI/UX based on user feedback.

- Seeking investor funding with real MVP results.

Example: If the AI hiring Minimum Viable Product (MVP) successfully ranks candidates 30% faster and increases recruiter efficiency, the startup can expand the AI’s capabilities, perhaps adding bias detection or deeper skill analysis.
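Step 1’s example hypothesis (“shortlist candidates 30% faster”) can be checked directly once you log how long shortlisting takes with and without the MVP. The sketch assumes “30% faster” means a 30% reduction in mean time per shortlist; the timing data are invented, and with samples this small a real decision would call for many more sessions.

```python
from statistics import mean
from typing import Sequence


def time_reduction(manual_minutes: Sequence[float], assisted_minutes: Sequence[float]) -> float:
    """Relative drop in mean time per shortlist: (manual - assisted) / manual."""
    manual, assisted = mean(manual_minutes), mean(assisted_minutes)
    return (manual - assisted) / manual


def hypothesis_supported(manual_minutes: Sequence[float], assisted_minutes: Sequence[float],
                         target: float = 0.30) -> bool:
    """True if the AI-assisted workflow meets the target time reduction."""
    return time_reduction(manual_minutes, assisted_minutes) >= target


manual = [60, 50, 70]    # minutes per shortlist, recruiters working alone (hypothetical)
assisted = [40, 38, 42]  # minutes per shortlist with the AI MVP (hypothetical)
print(round(time_reduction(manual, assisted), 3))  # 0.333
print(hypothesis_supported(manual, assisted))      # True
```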

Reality Check: If the MVP proves that AI isn’t the right solution, that’s still a win; it prevents unnecessary investment in a flawed concept.

AI MVP development isn’t just about launching fast; it’s about testing, learning, and iterating. Many AI startups fail because they over-engineer before validating their ideas. By following this step-by-step process, companies can reduce risk, optimize resources, and develop AI solutions that truly work in the real world.

How Much Does it Cost to Build an AI MVP?

Developing an AI MVP isn’t just about cutting costs but about maximizing impact with minimal investment. The total cost depends on multiple factors, including the complexity of the AI model, data availability, infrastructure needs, and development team composition. Let’s break it down.

Key Cost Factors for an AI MVP

Data Collection and Preparation ($0 – $50,000)

Data is the foundation of any AI model, but acquiring and preparing it can be expensive. Costs depend on whether you’re:

- Using open-source datasets (Free)

- Manually collecting and labeling data ($2,000 – $20,000)

- Purchasing proprietary datasets ($10,000 – $50,000)

AI Model Development ($5,000 – $100,000)

The complexity of your AI model impacts development costs.

AI Infrastructure and Cloud Costs ($500 – $30,000)

Training AI models requires high-performance computing resources, which can be expensive.

- Local training on a basic server ($500 – $5,000)

- Cloud-based AI services (AWS, Google Cloud, Azure) ($5,000 – $20,000)

- Enterprise-grade AI infrastructure ($20,000 – $30,000)

MVP Development (Frontend & Backend) ($10,000 – $50,000)

Even with strong AI functionality, you’ll need a minimal interface to test user interactions.

- Basic web app or API ($10,000 – $20,000)

- Mobile app or interactive dashboard ($20,000 – $50,000)

Team and Talent Costs ($15,000 – $100,000+)

Building an AI MVP requires a skilled team, including:

- AI/ML engineers ($80 – $200/hour)

- Backend developers ($60 – $150/hour)

- Frontend developers ($50 – $120/hour)

- Data scientists ($90 – $200/hour)
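Because team costs are driven by hourly rates, a quick estimate is simply hours multiplied by rate for each role. The sketch uses the rate ranges listed above; the hour allocation is purely hypothetical and will differ for every project.

```python
from typing import Dict, Tuple

# (low, high) USD per hour, from the ranges above.
HOURLY_RATES: Dict[str, Tuple[int, int]] = {
    "AI/ML engineer": (80, 200),
    "Backend developer": (60, 150),
    "Frontend developer": (50, 120),
    "Data scientist": (90, 200),
}


def team_cost_range(hours: Dict[str, float]) -> Tuple[float, float]:
    """Sum hours x rate per role at the low and high end of each rate range."""
    low = sum(HOURLY_RATES[role][0] * h for role, h in hours.items())
    high = sum(HOURLY_RATES[role][1] * h for role, h in hours.items())
    return low, high


# Hypothetical allocation for a small MVP.
example_hours = {"AI/ML engineer": 160, "Backend developer": 120,
                 "Frontend developer": 80, "Data scientist": 40}
print(team_cost_range(example_hours))  # (27600, 67600)
```

With this particular allocation, the estimate falls inside the $15,000 – $100,000 team-cost range above; doubling the hours roughly doubles both bounds.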

Estimated Total Cost Breakdown

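| AI MVP Component | Total Cost Estimate |
|---|---|
| Data gathering and preparation | $0 – $50,000 |
| AI model development | $5,000 – $100,000 |
| Cloud and infrastructure | $500 – $30,000 |
| MVP development | $10,000 – $50,000 |
| Talent and team cost | $15,000 – $100,000 |
| Total estimated cost | $30,000 – $150,000 |

Note that the total is not a simple sum of the rows: the component lower bounds add up to $30,500, while the upper bounds add up to $330,000, so the $150,000 ceiling presumably assumes that a lean MVP will not hit the top of every range at once.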

Budget AI MVP Option ($30,000 – $50,000)

- Use open-source data & pre-trained AI models

- Keep the interface minimal (basic web form or API)

- Use freelancers or AI agencies instead of full-time staff

Enterprise AI MVP Option ($100,000 – $250,000)

- Collect custom, high-quality data

- Build a proprietary AI model

- Develop a polished web/app interface with full automation

How to Minimize the Cost to Build an AI MVP Without Sacrificing Quality

- Start with a No-Code MVP. Use platforms like Bubble, Zapier, or Google Vertex AI to create prototypes before writing complex code.

- Leverage Pre-Trained AI Models. Instead of training a model from scratch, fine-tune GPT, BERT, YOLO, or other pre-built models.

- Validate Before Scaling. Test AI predictions manually, using a human-in-the-loop approach before committing to full automation.

- Outsource Wisely. Instead of hiring an in-house team, work with freelancers or AI-focused development agencies for early-stage development.

- Use Pay-As-You-Go Cloud Services. Platforms like AWS, Google Cloud, and Azure allow startups to pay only for the compute resources they use.

An AI MVP doesn’t have to cost millions. With lean strategies, pre-trained AI models, and cloud-based services, startups can build and validate an AI MVP for as little as $30,000, or scale up depending on complexity.

Challenges in AI MVP Development

We’ve seen countless AI startups race to launch their MVPs, only to hit unexpected roadblocks. Some struggled with unreliable data, while others underestimated infrastructure costs. A few built AI models that looked great in testing but completely failed in real-world scenarios.

The truth is, building an AI MVP isn’t just about writing code and launching fast; it requires carefully navigating challenges that can make or break a product. Over time, we’ve helped companies overcome these hurdles, and here’s what we’ve learned.

Data Collection: The First (and Often Biggest) Obstacle

AI models are only as good as the data they’re trained on. Many startups either don’t have enough data or unknowingly use biased datasets, leading to poor predictions.

What We’ve Seen: A startup we worked with trained its AI model using a small dataset that didn’t represent real-world conditions. The result? Their AI completely failed in production.

How We Solve It: We start with open-source datasets or help teams collect a small but high-quality dataset. Rather than aiming for massive volumes of data upfront, we focus on data diversity and proper labeling, ensuring the AI model learns from the right examples.

The Accuracy vs. Cost Dilemma

High-performing AI models don’t come cheap. Startups often pour money into training custom models when they could achieve similar results using existing ones.

What We’ve Seen: A company spent months (and tens of thousands of dollars) training an AI model from scratch, only to discover that a fine-tuned pre-trained model could achieve the same results at a fraction of the cost.

How We Solve It: Instead of reinventing the wheel, we leverage pre-trained models (like GPT, BERT, or YOLO) and fine-tune them with business-specific data. This approach reduces development time and costs while delivering solid accuracy.

Computing Costs Can Spiral Out of Control

AI training requires GPU-based cloud computing, which can quickly become expensive if not managed properly.

What We’ve Seen: A startup ran AI model training on high-end cloud servers without optimizing resource usage, running up unexpected infrastructure costs in the tens of thousands of dollars.

How We Solve It: We help teams optimize their cloud spending using on-demand AI services, auto-scaling instances, and efficient batch processing. This ensures they only pay for what they need.

AI Models That Work in Testing But Fail in the Real World

An AI model might perform exceptionally well in controlled test environments but completely break down when faced with real-world variability.

What We’ve Seen: A company built an AI-powered recommendation system that worked flawlessly in lab conditions but gave bizarre suggestions to real users. The issue? Their training data didn’t reflect actual user behavior.

How We Solve It: We emphasize continuous testing with real user data, ensuring that models generalize well beyond training conditions. We also implement feedback loops to retrain and improve AI performance over time.

AI Trust and Explainability

Users don’t just want AI predictions; they want to understand why the AI made a certain decision.

What We’ve Seen: An AI-driven hiring tool we worked on showed selection bias. The issue? The model learned patterns from biased historical data, and its decision-making process was completely opaque.

How We Solve It: We incorporate Explainable AI (XAI) techniques that show users how decisions are made. This builds trust and helps businesses identify and correct AI biases before they become problematic.
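For a simple additive scorer like the keyword-and-experience ranker described in Step 2, an explanation can be exact: each feature’s contribution is just its weight times its value. For complex models, libraries such as SHAP or LIME estimate similar per-feature attributions. The sketch also computes a selection-rate ratio across applicant groups, a basic bias check modeled on the “four-fifths” rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. All weights, groups, and counts are hypothetical, and neither check replaces a proper fairness review.

```python
from typing import Dict, List, Tuple


def explain_score(text: str, years: float, keyword_weights: Dict[str, float],
                  experience_weight: float = 0.5,
                  max_years: float = 10.0) -> List[Tuple[str, float]]:
    """Per-feature contributions to an additive score, largest magnitude first."""
    words = {w.strip(".,;:()").lower() for w in text.split()}
    contributions = {f"keyword:{k}": w for k, w in keyword_weights.items() if k in words}
    contributions["years_experience"] = experience_weight * min(years, max_years)
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


def selection_rate_ratio(outcomes: Dict[str, Tuple[int, int]]) -> float:
    """Lowest group selection rate divided by the highest.

    outcomes maps group -> (selected, total applicants). Values below 0.8 are
    a common warning sign under the four-fifths rule of thumb.
    """
    rates = [selected / total for selected, total in outcomes.values() if total > 0]
    if not rates or max(rates) == 0:
        return 1.0  # nobody selected: no disparity to measure
    return min(rates) / max(rates)


weights = {"python": 2.0, "sql": 1.0, "react": 0.5}  # hypothetical model weights
for feature, contribution in explain_score("Python and React developer", 6, weights):
    print(f"{feature:>18}: {contribution:+.1f}")

ratio = selection_rate_ratio({"group_a": (50, 100), "group_b": (30, 100)})
print(round(ratio, 2), "-> review for bias" if ratio < 0.8 else "-> ok")
```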

Scaling from MVP to a Full AI Product

A Minimum Viable Product (MVP) is just the beginning – scaling AI into a fully operational, revenue-generating product comes with challenges.

What We’ve Seen: Startups that rushed into full development without testing their business model often struggled to scale. Some faced bottlenecks in data processing, while others realized their AI models couldn’t handle real-world demand.

How We Solve It: We take an iterative approach, validating the AI MVP in small, controlled environments before scaling up. We also ensure that data pipelines, models, and infrastructure are built for long-term scalability.

We’ve seen firsthand how AI startups can waste time and money on avoidable mistakes. The key is identifying challenges in AI MVP development early, validating AI performance with real users, and keeping infrastructure costs lean.

Is Your AI MVP Ready to Scale?

Challenges are only one piece of the AI MVP puzzle. The next, equally critical aspect is scaling your MVP. Scaling too early can drain resources, while scaling too late can mean missing market opportunities. So, how do you know when the time is right?

AI MVP Checklist: Ready or Not Ready

| Area | Ready to Scale | Next Step | Not Ready | Fix |
|---|---|---|---|---|
| Model | High accuracy, stable, minimal drift | Expand data, optimize for scale | Unpredictable, erratic outputs | Refine data, test broadly |
| Users | Strong retention, growing engagement | Add features, integrations | Low retention, poor UX | Improve AI, test engagement |
| Market | Free users convert to paying, demand rises | Boost sales and marketing | No revenue, high costs | Test pricing, value proposition |
| Infrastructure | Handles growth, cost-efficient | Prepare for high demand | Crashes, slow under load | Optimize, test traffic |
| Competitive edge | Solves unmet need, beats rivals | Scale while staying unique | Lacks standout value | Sharpen niche, features |

Ready to Scale: Your AI Model Delivers Consistently

- The AI achieves high accuracy in real-world conditions, not just in testing.

- False positives and false negatives are within an acceptable range.

- Performance remains stable over time, with no major signs of model drift.

Next Step: Expand your dataset and optimize the model for larger-scale deployment.
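“Minimal drift” can be monitored by comparing the distribution of a model input or output score today against its distribution at launch. The sketch below computes the Population Stability Index (PSI) using equal-width bins taken from the baseline; the bin count and the smoothing constant are assumptions. A common rule of thumb reads a PSI below 0.1 as stable and above 0.25 as a significant shift, though teams often set their own thresholds.

```python
import math
from typing import List, Sequence


def population_stability_index(baseline: Sequence[float], recent: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (recent% - baseline%) * ln(recent% / baseline%)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


launch_scores = [i / 100 for i in range(100)]    # hypothetical scores at launch
this_week = [0.5 + i / 200 for i in range(100)]  # hypothetical, shifted upward
print(round(population_stability_index(launch_scores, launch_scores), 3))  # 0.0
print(population_stability_index(launch_scores, this_week) > 0.25)         # True
```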

Not Ready: Model Performance is Unpredictable

- Accuracy fluctuates significantly when exposed to new data.

- Users report erratic or incorrect AI outputs.

- The model requires frequent manual retraining to stay effective.

Fix It: Identify weak points in training data, fine-tune the model, and test with a broader dataset before scaling.

Ready to Scale: Users Actively Engage with the AI

- Retention rates are strong – users keep coming back.

- Usage frequency is steadily increasing.

- Customer feedback indicates trust in the AI’s decisions.

Next Step: Introduce advanced features or integrations to deepen engagement.
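“Users keep coming back” is easiest to judge with a concrete retention metric. This sketch computes rolling day-N retention, meaning the share of a signup cohort still active on day N or later; the activity-log format (a user ID mapped to the days since signup on which that user was active) is an assumption.

```python
from typing import Dict, Set


def rolling_retention(activity: Dict[str, Set[int]], day: int) -> float:
    """Share of users with any activity on `day` (days since signup) or later."""
    if not activity:
        return 0.0
    retained = sum(1 for days in activity.values() if any(d >= day for d in days))
    return retained / len(activity)


cohort = {  # hypothetical activity log
    "u1": {0, 1, 8},
    "u2": {0},
    "u3": {0, 3},
    "u4": {0, 7},
}
print(rolling_retention(cohort, 7))  # 0.5 (u1 and u4)
```

Comparing this number across successive signup cohorts shows whether product changes are improving retention, rather than relying on a single snapshot.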

Not Ready: Users Drop Off Quickly

- The AI gets high initial interest, but retention is low.

- Users frequently override AI suggestions or disengage.

- Feedback highlights usability issues or a lack of real value.

Fix It: Improve UX, refine AI interactions, and run A/B tests to identify what keeps users engaged before expanding.

Ready to Scale: The Market is Willing to Pay

- Free users are converting into paying customers at a promising rate.

- There is steady inbound demand and a growing sales pipeline.

- Customer lifetime value is higher than customer acquisition costs.

Next Step: Expand go-to-market strategies and invest in scaling sales and marketing.
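The LTV-versus-CAC test can be made concrete with a simple subscription-style formula: lifetime value ≈ monthly revenue per customer × gross margin ÷ monthly churn rate. This is one common simplification rather than the only way to model LTV, and the inputs below are hypothetical. Many SaaS teams treat an LTV:CAC ratio of roughly 3:1 as a rough benchmark.

```python
def simple_ltv(monthly_revenue: float, gross_margin: float, monthly_churn: float) -> float:
    """Approximate customer lifetime value: margin-adjusted monthly revenue / churn rate."""
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    return monthly_revenue * gross_margin / monthly_churn


def ltv_to_cac(ltv: float, cac: float) -> float:
    """How many times over a customer repays the cost of acquiring them."""
    return ltv / cac


ltv = simple_ltv(monthly_revenue=200, gross_margin=0.8, monthly_churn=0.05)  # hypothetical
ratio = ltv_to_cac(ltv, cac=1_000)  # hypothetical acquisition cost
print(f"LTV ≈ ${ltv:,.0f}, LTV:CAC ≈ {ratio:.1f}")  # LTV ≈ $3,200, LTV:CAC ≈ 3.2
```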

Not Ready: No Clear Path to Revenue

- Users engage with the free version but hesitate to upgrade.

- There’s interest in the product, but little willingness to pay.

- Acquisition costs outweigh revenue potential.

Fix It: Validate pricing models, refine the value proposition, and experiment with monetization strategies before scaling.

Ready to Scale: Infrastructure Can Handle Growth

- Cloud costs are optimized, and resource usage is under control.

- Data pipelines can process increasing volumes without major slowdowns.

- Response times remain consistent as user numbers grow.

Next Step: Prepare for higher demand by ensuring redundancy, auto-scaling, and efficient data handling.

Not Ready: System Crashes Under Increased Load

- The AI performs well in controlled environments but struggles with real-world usage.

- Cloud costs become unsustainable as more users interact with the AI.

- The system suffers from latency issues and slow response times.

Fix It: Optimize infrastructure, introduce caching mechanisms, and test with simulated high traffic before expanding.

Ready to Scale: Your AI Has a Competitive Edge

- The product solves a real, unmet need in the market.

- Competitor analysis shows the solution is superior or more efficient.

- Early adopters provide strong word-of-mouth marketing.

Next Step: Scale strategically while maintaining differentiation.

Not Ready: Your AI Doesn’t Stand Out

- Competitors offer better or more cost-effective solutions.

- The AI lacks a clear, unique selling point.

- Users see it as a “nice to have” rather than an essential tool.

Fix It: Focus on refining the niche, improving core features, and clarifying market positioning before pushing for growth.

How Appinventiv Helps AI Startups Build and Scale Their MVP

Bringing an AI product to market is complex. From ensuring the model works in real-world conditions to handling infrastructure challenges, many startups struggle to balance speed, efficiency, and scalability. But at Appinventiv, we’ve helped AI-driven startups navigate these hurdles, ensuring their MVPs are functional and ready for growth.

Our approach to AI MVP development is tailored to each product’s unique needs. We focus on building lean yet powerful AI models that deliver real value from day one. Instead of overcomplicating the initial build, we refine the core AI functionality, ensuring it performs consistently across diverse datasets. This allows startups to validate their AI’s effectiveness early on, without investing in unnecessary features that could slow development.

Moreover, when clients ask us how to build an AI MVP, our answer always includes the same principle: design for scalability from the start. Many MVPs face performance bottlenecks when transitioning from pilot users to larger-scale adoption.

To prevent this, we design cloud-first architectures that efficiently handle increased data loads. Our teams optimize infrastructure, integrate auto-scaling mechanisms, and fine-tune the AI pipelines to ensure stability as user demand grows.

Beyond technical execution, we support AI startups in making data-driven decisions about when and how to scale. Many companies struggle with premature scaling, leading to high costs without strong user retention.

We analyze key indicators such as model performance, user engagement, and market demand to determine the right time for expansion. If the AI isn’t delivering the expected value, we refine the product before investing in growth.

With a proven track record of helping AI startups launch and scale successfully, the Appinventiv team ensures that AI MVPs don’t just make it to market but thrive in competitive landscapes.

FAQs

Q. How long does it take to build an AI MVP?

A. The timeline for developing an AI MVP varies based on complexity, data availability, and model training requirements. On average, building a functional AI MVP takes 3 to 6 months. The timeline can be shorter if high-quality, labeled data is readily available and the AI model is straightforward. However, development may take longer if extensive data collection, preprocessing, or iterative model tuning is needed.

Q. How to build an AI MVP?

A. Building an AI MVP involves several key steps:

- Define the problem and the AI use case

- Validate the idea

- Prepare the data

- Develop a lightweight AI model

- Build the MVP prototype

- Test and refine

- Launch and measure impact

Q. How much does it cost to build an AI MVP?

A. The cost of developing an AI MVP depends on factors like AI complexity, dataset availability, model training needs, and cloud infrastructure. On average, AI MVP development costs range from $40,000 to $150,000. Simpler AI solutions with pre-trained models or basic automation features fall on the lower end. AI MVPs requiring custom deep learning models, high data processing, or extensive backend development can be more expensive.

Q. What is the difference between an AI MVP and a traditional Minimum Viable Product (MVP)?

A. An AI MVP differs from a traditional MVP in several ways:

- Data Dependency – AI MVPs depend on high-quality training data (though, as noted above, a small, well-curated dataset is often enough to start), while traditional MVPs rely more on functionality and user experience.

- Iterative Learning – AI models continuously improve with more data, whereas traditional MVPs focus on refining features based on user feedback.

- Development Complexity – AI MVPs involve model training, validation, and optimization, making development more intricate than rule-based or static-functionality MVPs.

- Scalability Considerations – AI MVPs must account for growing data processing needs, while traditional MVPs scale primarily based on user growth and feature expansion.
